Pictured (l-r): Dr Adalberto Claudio Quiros, Dr Ke Yuan and Professor John Le Quesne stand in a data centre at the University of Glasgow’s School of Computing Science. (Credit: University of Glasgow)

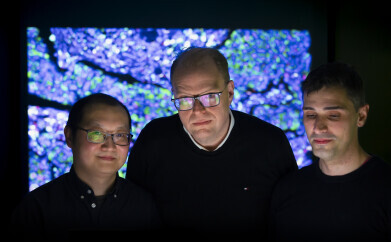

Dr Ke Yuan, Professor John Le Quesne and Dr Adalberto Claudio Quiros standing in front of a projection of one of the cancer sample slides used to train the study's machine learning algorithm.

AI system proves its potential for diagnostic imaging

Jun 19 2024

An international team of AI specialists and cancer scientists have taken biological sample imaging to a new level with a computer system that has learnt the language of cancer and can spot signs of the disease with remarkable accuracy.

Currently, pathologists examine tissue samples taken from cancer patients, mounted on slides, under a microscope and characterise their features. Their observations on the tumour’s type and stage of growth help doctors determine each patient’s course of treatment and their chances of recovery.

By harnessing the power of AI, the new system, which the researchers call ‘Histomorphological Phenotype Learning’ (HPL), could help human pathologists make faster, more accurate diagnoses and provide reliable predictions of patient outcomes.

The researchers, led by the University of Glasgow and New York University, collected thousands of high-resolution images of lung adenocarcinoma tissue samples from 452 patients, stored in the United States National Cancer Institute’s Cancer Genome Atlas database. In many cases, the data is accompanied by additional information on how the patients’ cancers progressed.

They then developed an algorithm which used a training process called self-supervised deep learning to analyse the images and spot patterns based solely on the visual data in each slide. The algorithm broke down the slide images into thousands of tiny tiles, each representing a small amount of human tissue. A deep neural network scrutinised the tiles, teaching itself in the process to recognise and classify visual features shared across the cells in each tissue sample.
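For readers curious about the tiling step, here is a minimal Python sketch of cutting a slide image into equal-sized square tiles. It assumes the whole image fits in memory as a NumPy array; real whole-slide images are gigapixel files that are usually read region by region with dedicated libraries such as OpenSlide. The function name `tile_image` and the 224-pixel tile size are illustrative assumptions, not details from the study.

```python
from typing import Optional

import numpy as np


def tile_image(image: np.ndarray, tile_size: int = 224,
               stride: Optional[int] = None) -> np.ndarray:
    """Cut an H x W x C image into square tiles of side tile_size.

    Tiles that would run past the right or bottom edge are dropped, so every
    returned tile has the same shape. The 224-pixel default is an
    illustrative assumption, not a value taken from the study.
    """
    if stride is None:
        stride = tile_size  # non-overlapping tiles by default
    height, width = image.shape[:2]
    tiles = [
        image[top:top + tile_size, left:left + tile_size]
        for top in range(0, height - tile_size + 1, stride)
        for left in range(0, width - tile_size + 1, stride)
    ]
    if not tiles:
        # Image smaller than one tile: return an empty batch with the right shape.
        return np.empty((0, tile_size, tile_size) + image.shape[2:], dtype=image.dtype)
    return np.stack(tiles)


# Hypothetical example: a blank 1000 x 700 RGB "slide".
slide = np.zeros((1000, 700, 3), dtype=np.uint8)
tiles = tile_image(slide)
print(tiles.shape)  # (12, 224, 224, 3)
```

Each tile in the resulting batch would then be passed to the neural network; a self-supervised method learns from these tiles alone, without any labels attached.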

Dr Ke Yuan, of the University of Glasgow’s School of Computing Science, senior author of the research paper, said: “We didn’t provide the algorithm with any insight into what the samples were or what we expected it to find. Nonetheless, it learned to spot recurring visual elements in the tiles which correspond to textures, cell properties and tissue architectures called phenotypes.

“By comparing those visual elements across the whole series of images it examined, it recognised phenotypes which often appeared together, independently picking out the architectural patterns that human pathologists had already identified in the samples.”

When the team added slides from squamous cell lung cancer to the HPL system’s analysis, it could distinguish between the two types of lung cancer with 99% accuracy.

Once the algorithm had identified patterns in the samples, the researchers used it to analyse links between the phenotypes it had classified and the clinical outcomes stored in the database. It found that certain phenotypes, such as less invasive tumour cells or large numbers of inflammatory cells attacking the tumour, were more common in patients who lived longer after treatment. Others, such as aggressive tumour cells forming solid masses or regions from which the immune system was excluded, were more closely associated with tumour recurrence.
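The article does not say how tile-level phenotypes were summarised for each patient before being compared with clinical outcomes. One simple approach, assumed here purely for illustration, is to describe each patient by the share of their tiles that fall into each phenotype cluster; those proportions could then be fed into a survival model. The function name and cluster labels below are hypothetical.

```python
from collections import Counter
from typing import List


def phenotype_composition(tile_labels: List[int], n_phenotypes: int) -> List[float]:
    """Fraction of a patient's tiles assigned to each phenotype cluster 0..n-1.

    Labels outside 0..n_phenotypes-1 are ignored, in which case the
    returned fractions sum to less than 1.
    """
    if not tile_labels:
        return [0.0] * n_phenotypes
    counts = Counter(tile_labels)
    total = len(tile_labels)
    return [counts[k] / total for k in range(n_phenotypes)]


# Hypothetical patient whose four tiles fell into phenotype clusters 0, 0, 2 and 1.
print(phenotype_composition([0, 0, 2, 1], n_phenotypes=3))  # [0.5, 0.25, 0.25]
```

Using proportions rather than raw counts puts patients with very different numbers of tiles on the same scale.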

The predictions made by the HPL system correlated well with the real-life outcomes of the patients stored in the database, correctly assessing the likelihood and timing of the cancer’s return 72% of the time. Human pathologists tasked with the same prediction drew the correct conclusions with 64% accuracy.

The research was expanded to include analysis of thousands of slides across 10 other cancer types, including breast, prostate and bladder cancers, with similarly accurate results despite the increased complexity of the task.

Co-author Professor John Le Quesne, from the University of Glasgow’s School of Cancer Sciences, said: “We were surprised but very pleased by the effectiveness of machine learning to tackle this task. It takes many years to train human pathologists to identify the cancer subtypes they examine under the microscope and draw conclusions about the most likely outcomes for patients. It’s a difficult, time-consuming job, and even highly trained experts can sometimes draw different conclusions from the same slide.

“In a sense, the algorithm at the heart of the HPL system taught itself from first principles to speak the language of cancer – to recognise the extremely complex patterns in the slides and ‘read’ what they can tell us about both the type of cancer and its potential effect on patients’ long-term health. Unlike a human pathologist, it doesn’t understand what it’s looking at, but it can still draw strikingly accurate conclusions based on mathematical analysis.

“It could prove to be an invaluable tool to aid pathologists in the future, augmenting their existing skills with an entirely unbiased second opinion. The insight provided by human expertise and AI analysis working together could provide faster, more accurate cancer diagnoses and evaluations of patients’ likely outcomes. That, in turn, could help improve monitoring and better-tailored care across each patient’s treatment.”

Dr Adalberto Claudio Quiros, a research associate in the University of Glasgow’s School of Cancer Sciences and School of Computing Science, is a co-first author of the paper. He said: “This research shows the potential that cutting-edge machine learning has to create advances in cancer science which could have significant benefits for patient care.”

Dr Aristotelis Tsirigos and Dr Nicolas Coudray, of New York University’s Grossman School of Medicine and Perlmutter Cancer Centre, are co-senior investigator and co-first author on the paper, respectively. Researchers from New York University, University College London and the Karolinska Institute also contributed to the paper.

The research was supported by funding from the Engineering and Physical Sciences Research Council (EPSRC), the Biotechnology and Biological Sciences Research Council (BBSRC), and the National Institutes of Health.

The paper, ‘Mapping the landscape of histomorphological cancer phenotypes using self-supervised learning on unlabelled, unannotated pathology slides’, is published in Nature Communications.
